Check your research, MIT: 95% of AI projects don’t fail – far from it.

According to new data from G2, nearly 60% of companies already have AI agents in production, and fewer than 2% of those agents actually fail once deployed. This paints a very different picture from recent academic forecasts indicating widespread stagnation in AI projects.

G2 is one of the largest crowdsourced software review platforms in the world, and its dataset reflects real-world adoption trends, which show that AI agents are proving to be far more durable and “sticky” than early AI pilots.

“Our report really points out that the agent is a different beast when it comes to AI in terms of failure or success,” Tim Sanders, head of research at G2, told VentureBeat.

Delivering on AI in customer service, business intelligence, and software development

Sanders points out that the now often-cited MIT study, released in July, only considered generative AI projects, and many media outlets generalized that into a claim that AI fails 95% of the time. He notes that the university researchers analyzed public announcements rather than closed-loop data. If companies did not report a profit-and-loss impact, their projects were counted as failures, even if they were not.

G2’s AI Agent Insights Report 2025, by contrast, surveyed more than 1,300 B2B decision makers and found that:

- 57% of companies have agents in production and 70% say agents are the “core of operations”;
- 83% of them are satisfied with the agent’s performance;
- Companies now invest more than $1 million on average per year, with one in four spending more than $5 million;
- 9 out of 10 plan to increase this investment over the next 12 months;
- Organizations saw 40% cost savings, 23% faster workflow, and 1 in 3 reported speed gains of more than 50%, especially in marketing and sales;
- Nearly 90% of study participants reported higher employee satisfaction in departments where agents were deployed.

Leading use cases for AI agents? Customer service, business intelligence (BI) and software development.

Interestingly, G2 found a “surprising number” (about 1 in 3) of what Sanders calls “let it rip” organizations.

“They let an agent do a task and then either cancel it immediately if it’s a bad action, or do QA so they can undo the bad actions very quickly,” he explained.

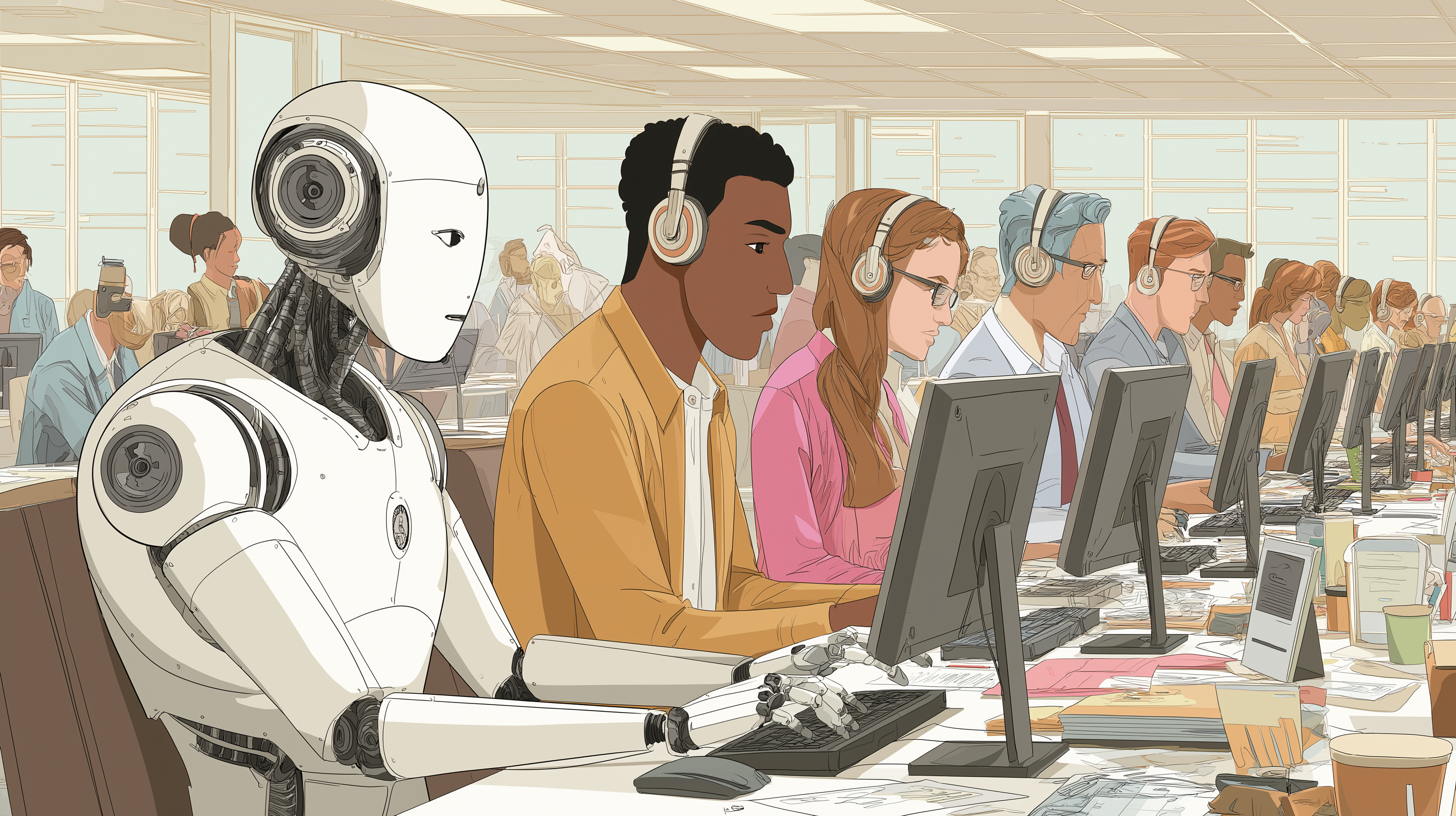

At the same time, agent programs with a human in the loop were twice as likely as fully autonomous agent strategies to achieve cost savings of 75% or more.

This reflects what Sanders called a “dead heat” between “Let It Rip” and “Leave Some Human Gates” organizations. “There will be a human being in the loop years from now,” he said. “More than half of our survey respondents told us there was more human supervision than they expected.”

Still, nearly half of IT buyers are comfortable giving agents full autonomy in low-risk workflows such as data processing or data pipeline management. Meanwhile, think of AI and research as preparatory work, Sanders said: agents collect information in the background to prepare humans to make the final decisions.

A classic example of this is a mortgage loan, as Sanders pointed out: Agents do everything right up until a human analyzes their findings and approves or rejects the loan.

If there are errors, they are in the background. “It doesn’t publish for you and put your name on it,” Sanders said. “As a result, you trust it more. You use it more.”

When it comes to specific deployment methods, Sanders reports that Salesforce’s Agentforce is “winning” over turnkey vendor offerings and in-house builds, with 38% of total market share. However, many organizations seem to be moving toward a hybrid approach, with the goal of eventually standing up internal tools.

Then, because they want a reliable source of data, “they’ll crystallize around Microsoft, ServiceNow, Salesforce, companies that have a real system of record,” he predicted.

AI agents are not deadline-driven

Why are agents (at least in some cases) so much better than humans? Sanders referred to a concept called Parkinson’s Law, which states that “work expands so as to fill the time available for its completion.”

“Individual productivity does not lead to organizational productivity, because humans are only driven by deadlines,” Sanders said. When organizations took on generative AI projects, they did not move the goalposts; the deadlines did not change.

“The only way to fix this is to either move the goal post up or deal with non-humans, because non-humans do not obey Parkinson’s Law,” he said, noting that they do not suffer from “human procrastination syndrome.”

Agents don’t take breaks. They don’t get distracted. “They work hard so you don’t have to change deadlines,” Sanders said.

“If you focus on faster and faster QA cycles that may be automated, you fix your agents faster than you can fix humans.”

Start with business problems, and understand that trust is slow to build

Still, Sanders sees AI following the path of the cloud when it comes to trust. He recalls that in 2007 everyone was rushing to deploy cloud tools; then, by 2009 or 2010, “there was kind of a trough in confidence.”

Add to this the security concerns: 39% of all G2 survey respondents said they had experienced a security incident since deploying AI, and 25% of those incidents were severe. Sanders stressed that companies should consider measuring, in milliseconds, how quickly an agent can be retrained so that it never repeats a bad action.

He always advises including IT operations in AI deployments. They know what went wrong with generative AI and robotic process automation (RPA) and can get to the bottom of explainability, which leads to more trust.

On the other hand, don’t blindly trust vendors. In fact, only half of respondents said they did; Sanders noted that the No. 1 signal of trust is the agent’s explainability. “In qualitative interviews, we were told over and over again, if you [the vendor] can’t explain it, you can’t deploy and manage it.”

It’s also important to start with a business problem and work backwards, he advised: Don’t buy agents and then go looking for a proof of concept. If leaders apply agents to the biggest pain points, internal users will be more tolerant when incidents occur and more willing to iterate, thus building their skill set.

“People still don’t trust the cloud, they certainly don’t trust gen AI, and they may not trust agents until they try them, and then the game changes,” Sanders said. “Trust arrives on a mule, you don’t just get forgiveness.”
