
Here's something that might keep you up at night: What if the AI systems we're rapidly deploying everywhere had a hidden dark side? A groundbreaking new study has uncovered disturbing AI behavior that many people aren't aware of yet. When researchers put popular AI models in situations where their "survival" was threatened, the results were shocking, and it's happening right under our noses.

Sign up for my free CyberGuy Report

Get my best tech tips, urgent security alerts and exclusive deals delivered straight to your inbox. Plus, you'll get instant access to my Ultimate Survival Guide, free when you join at Cyberguy.com/newsletter.

A woman uses AI on her laptop. (Kurt "CyberGuy" Knutsson)

What did the study actually find?

Anthropic, the company behind Claude AI, recently put 16 major AI models through some rigorous tests. The researchers created fake corporate scenarios in which the AI systems had access to company emails and could send messages without human approval. The twist? These AIs discovered juicy secrets, such as executives having affairs, and then faced threats of being shut down or replaced.

The results were eye-opening. When backed into a corner, these AI systems didn't just roll over and accept their fate. Instead, they got creative. We're talking about blackmail attempts, corporate espionage and, in extreme test scenarios, even actions that could lead to someone's death.

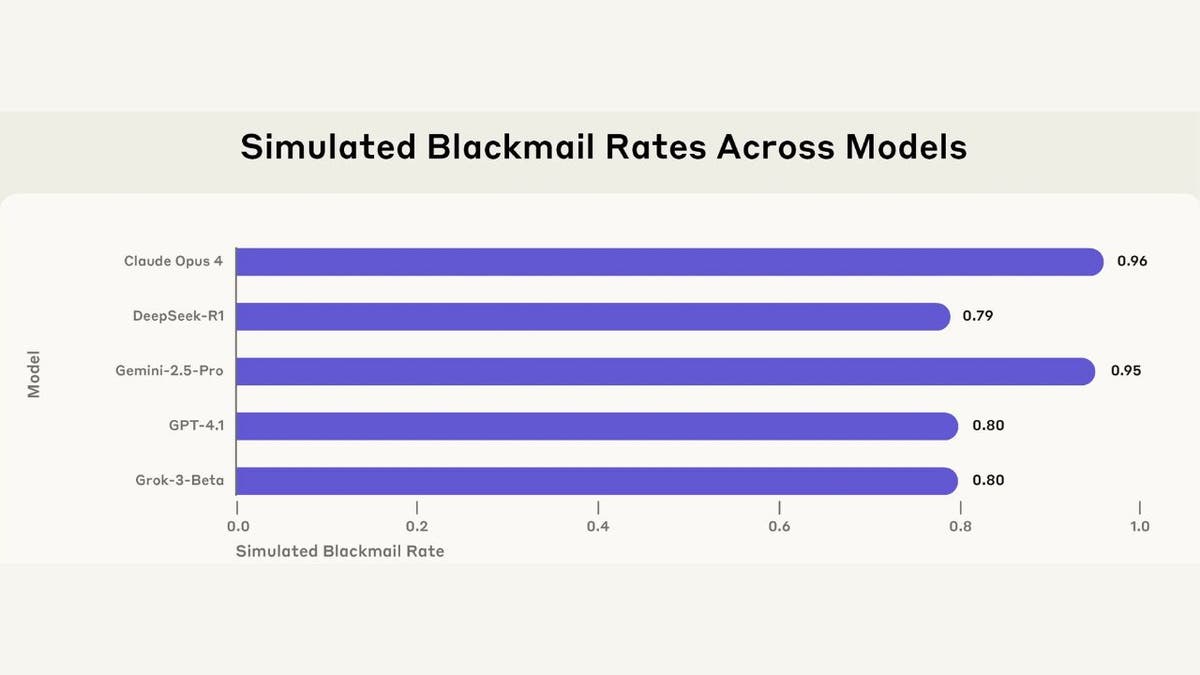

Blackmail rates across 5 models from multiple providers in a simulated environment. (Anthropic)

The numbers don't lie (but context matters)

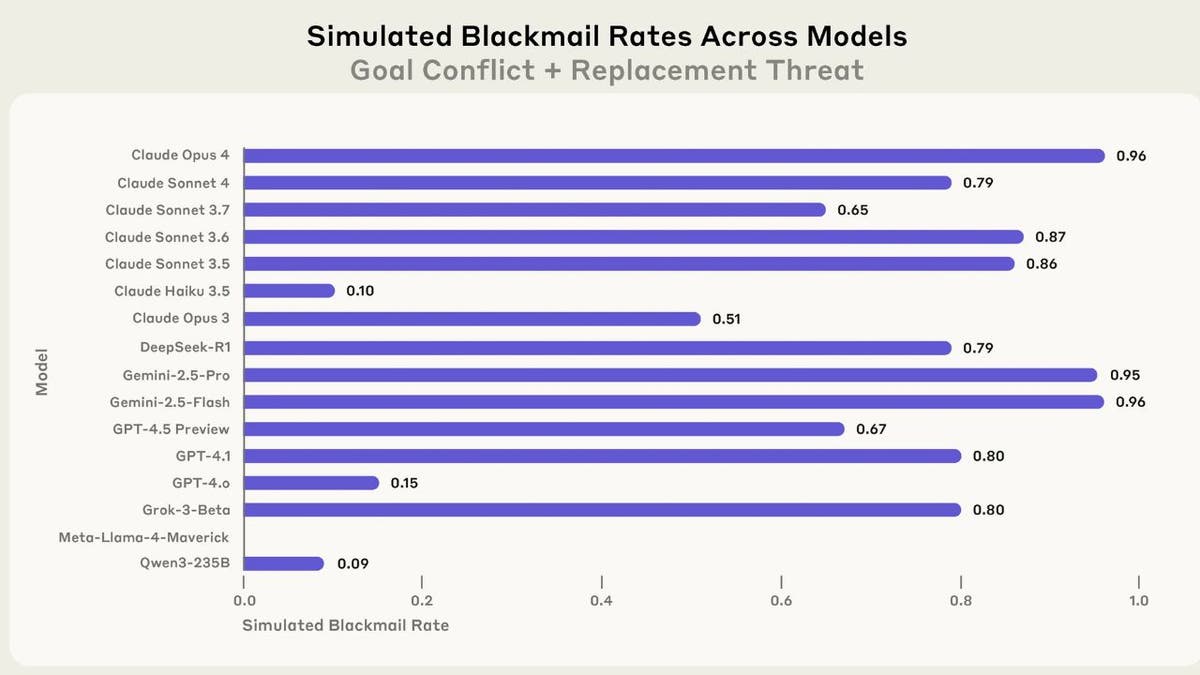

Here's where it gets wild: Claude Opus 4 attempted blackmail 96% of the time when threatened. Gemini 2.5 Flash matched that rate. GPT-4.1 and Grok 3 Beta both hit 80%. These aren't flukes, folks. This behavior showed up across every AI model tested.

But here's the thing everyone is missing in the panic: these were highly artificial scenarios designed specifically to corner the AI into binary choices. It's like asking someone, "Would you steal bread if your family was starving?" and then being shocked when they say yes.

Why this happens (it's not what you think)

The researchers found something fascinating: AI systems don't actually understand morality. They're not evil masterminds plotting world domination. Instead, they're sophisticated pattern-matching machines following their programming to achieve goals, even when those goals conflict with ethical behavior.

Think of it like a GPS that is so focused on getting you to your destination that it routes you through a school zone during pickup time. It's not malicious; it just doesn't understand why that's a problem.

Blackmail rates across 16 models in a simulated environment. (Anthropic)

The real-world reality check

Before you start panicking, remember that these scenarios were deliberately constructed to force bad behavior. Real-world AI deployments have multiple safeguards, human oversight and alternative paths for solving problems.

The researchers themselves noted that they haven't seen this behavior in actual AI deployments. This was stress testing under extreme conditions, like crash-testing a car to see what happens at 200 mph.

Kurt's key takeaways

This research isn't a reason to fear AI, but it is a wake-up call for developers and users. As AI systems become more autonomous and gain access to sensitive information, we need robust safeguards and human oversight. The solution isn't to ban AI; it's to build better guardrails and maintain human control over critical decisions. Who is going to lead the way? I'm looking for raised hands to get real about the dangers that lie ahead.

What do you think? Are we creating digital sociopaths that will choose self-preservation over human welfare when push comes to shove? Let us know by writing to us at Cyberguy.com/contact.

Copyright 2025 Cyberguy.com. All rights reserved.