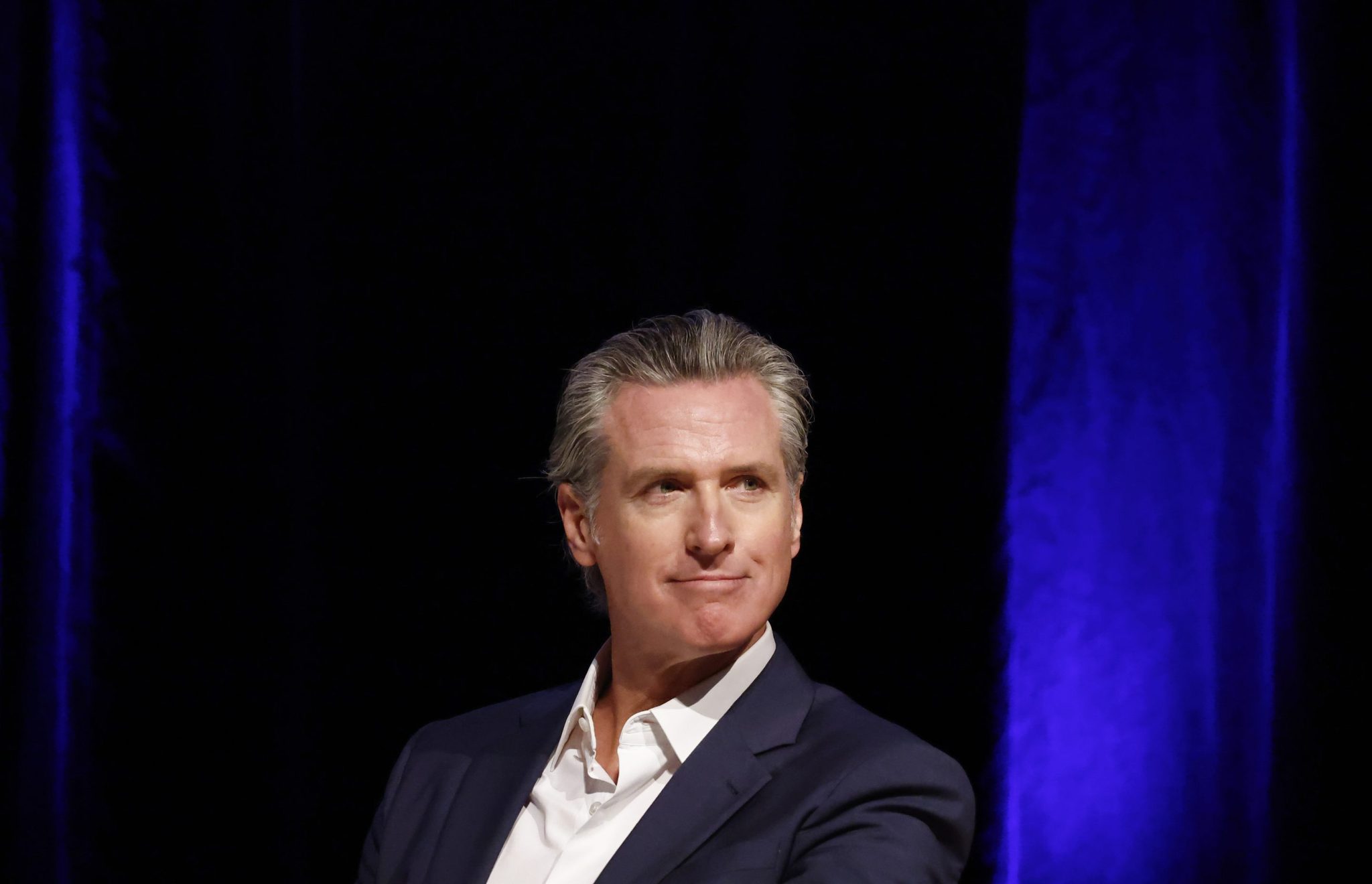

California has taken a significant step toward regulating artificial intelligence: Governor Gavin Newsom has signed a new state law that requires major AI companies, many of which are headquartered in the state, to publicly disclose how they plan to mitigate the potentially catastrophic risks posed by advanced AI models.

The law also creates mechanisms for reporting critical safety incidents, extends whistleblower protections to AI company employees, and begins the development of CalCompute, a government consortium tasked with building a public computing cluster for safe, ethical, and sustainable AI research. By compelling companies including OpenAI, Meta, Google DeepMind, and Anthropic to follow these new rules at home, California may effectively set the standard for AI oversight.

Newsom framed the law as a balance between protecting the public and encouraging innovation. In a statement, he wrote: “California has proven that we can establish regulations to protect our communities while also ensuring that the growing AI industry continues to thrive. This legislation strikes that balance.”

The legislation, authored by State Senator Scott Wiener, follows a failed attempt to pass a similar AI law last year. Wiener said that the new law, known as SB 53 (for Senate Bill 53), focuses on transparency rather than liability, a departure from his earlier bill, SB 1047, which Newsom vetoed last year.

Sunny Gandhi, Vice President of Political Affairs at Encode AI, an advocacy organization, called SB 53 a notable win for California and the AI industry as a whole, saying that by establishing transparency and accountability it paves the way for a competitive, safe, and world-class AI ecosystem.

Industry reactions to the new legislation have been divided. Jack Clark, co-founder of Anthropic, which supported SB 53, wrote on X: “We applaud (the California Governor) for signing (Scott Wiener’s) SB 53, establishing transparency requirements for frontier AI companies that will help us all have better data about these systems and the companies building them.” He stressed that although federal standards remain important to avoid a patchwork of state rules, California has created a framework that balances public safety with continued innovation.

OpenAI, which did not endorse the bill, told news outlets it was “pleased to see that California has created a critical path toward harmonization with the federal government, the most effective approach to AI safety,” adding that, if implemented correctly, the law would enable cooperation between federal and state governments on AI deployment. Meta spokesperson Christopher Sgro similarly told the media that the company “supports balanced AI regulation,” describing SB 53 as “a positive step in that direction,” and said Meta looks forward to working with lawmakers to protect consumers while fostering innovation.

Although it is a state-level law, the California legislation will have global reach, as 32 of the top 50 AI companies are based in the state. The bill requires AI companies to report incidents to California’s Office of Emergency Services and protects whistleblowers, allowing engineers and other employees to raise safety concerns without risking their careers. SB 53 also includes civil penalties for noncompliance, enforceable by the state attorney general, although AI policy experts such as Miles Brundage note that these penalties are relatively weak, even compared with those imposed by the European Union’s AI Act.

Brundage, who was formerly head of policy research at OpenAI, said in a post on X that while SB 53 represents a “step forward,” “actual transparency” in reporting, minimum risk thresholds, and technically robust third-party evaluations were still needed.

Collin McCune, head of government affairs at Andreessen Horowitz, also warned that the law “risks squeezing out startups, slowing innovation, and entrenching the biggest players,” and said it sets a dangerous precedent for state-by-state regulation that could create “a patchwork of 50 compliance regimes that startups don’t have the resources to navigate.” Several AI companies that lobbied against the bill made similar arguments.

California aims to promote transparency and accountability in the AI sector through public disclosure and incident reporting requirements; however, critics like McCune argue that the law could make compliance difficult for smaller companies and entrench Big Tech’s dominance in AI.

Thomas Woodside, co-founder of the Secure AI Project, a co-sponsor of the law, called the concerns about startups “overblown.”

“This bill applies only to companies that train AI models with a huge amount of compute that costs hundreds of millions of dollars, something a tiny startup can’t do,” he told Fortune. “This is about reporting very serious things that go wrong, protecting whistleblowers, and a very basic level of transparency. Other obligations don’t even apply to companies that have less than $500 million in annual revenue.”

